AI Act in Europe

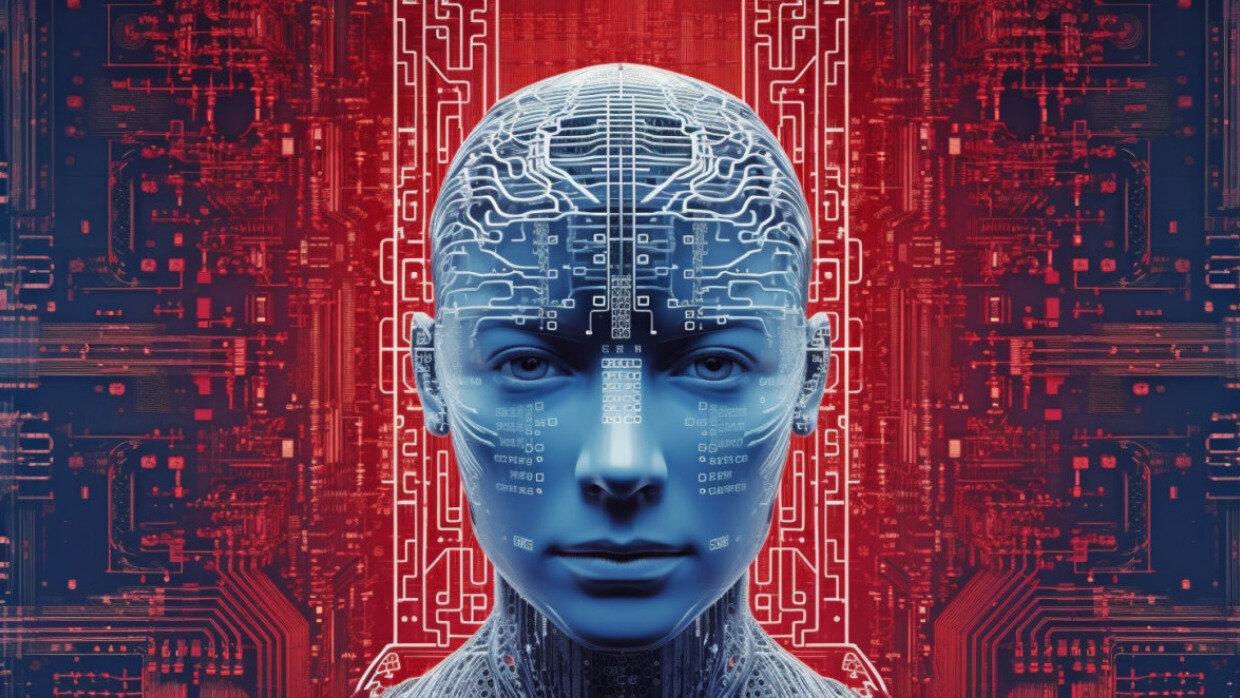

AI risk refers to the potential dangers or negative consequences associated with the development, deployment, and use of artificial intelligence (AI) systems. As AI technology advances, it introduces both opportunities and challenges, and managing the risks is a crucial aspect of responsible AI development. Some key aspects of AI risk include:

- Bias and Fairness: AI systems can inherit and perpetuate biases present in their training data. This can lead to unfair or discriminatory outcomes, particularly in areas like hiring, lending, and law enforcement.

- Transparency and Explainability: The lack of transparency in some AI models can make it challenging to understand how decisions are made. Explainability is crucial for building trust and ensuring accountability.

- Security Concerns: AI systems can be vulnerable to attacks and manipulation. Ensuring the security of AI applications is essential to prevent unauthorized access, data breaches, and malicious use.

- Job Displacement: Automation driven by AI has the potential to replace certain jobs, leading to unemployment and socioeconomic challenges. It's important to address the impact of AI on the workforce and implement strategies for retraining and reskilling.

- Ethical Use: There is a risk of AI being used unethically, such as for deepfake creation, misinformation, or surveillance. Establishing ethical guidelines and regulations is crucial to prevent misuse.

- Unintended Consequences: AI systems may exhibit unexpected behaviors or consequences that were not anticipated during their development. Thorough testing and ongoing monitoring are essential to identify and address such issues.

- Regulatory Compliance: Ensuring that AI applications comply with relevant laws and regulations is critical. In some cases, inadequate regulation can contribute to ethical and legal challenges.

- Long-Term Impacts: As AI becomes more advanced, there are concerns about its long-term impact on society, including issues related to autonomy, control, and the potential for superintelligent systems.

Addressing AI risk requires a multidisciplinary approach involving technology developers, policymakers, ethicists, and society at large. It involves implementing safeguards, ethical guidelines, and regulatory frameworks to mitigate potential harm and ensure that AI is developed and used responsibly.

The EU AI Act responds to these risks by sorting AI systems into categories according to the level of risk they pose:

- Banned Systems: Some AI systems, such as live facial recognition in public spaces or systems designed to manipulate people, are prohibited because the risk they pose is considered unacceptable.

- High-Risk Systems: AI used in critical areas like cars, airplanes, education, and justice is closely monitored. These systems must meet strict requirements to ensure they are safe and reliable.

- Generative AI (e.g., ChatGPT): These systems must be transparent about the data used to train them and must not generate illegal content.

- Low-Risk Systems: Programs that automatically improve photos or videos are considered low-risk. They only need to tell users that they use AI and are otherwise not subject to strict regulation.
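For readers who think in code, the categories above can be sketched as a simple lookup table. This is only an illustration of the summary in this post, not a legal classification tool: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_SYSTEMS`, and `obligations_for` are hypothetical, and the obligations are paraphrased from the list above rather than taken from the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Categories as named in this post (not official legal terms)."""
    BANNED = "banned"
    HIGH_RISK = "high-risk"
    GENERATIVE_AI = "generative AI"
    LOW_RISK = "low-risk"


# Obligations paraphrased from the post; illustrative only.
OBLIGATIONS = {
    RiskTier.BANNED: ["may not be deployed"],
    RiskTier.HIGH_RISK: ["meet strict safety requirements", "be closely monitored"],
    RiskTier.GENERATIVE_AI: ["be clear about training data", "not create illegal content"],
    RiskTier.LOW_RISK: ["tell users that AI is in use"],
}

# Example systems mentioned in the post, mapped to the category it assigns them.
EXAMPLE_SYSTEMS = {
    "live facial recognition": RiskTier.BANNED,
    "AI in cars": RiskTier.HIGH_RISK,
    "ChatGPT": RiskTier.GENERATIVE_AI,
    "photo enhancement": RiskTier.LOW_RISK,
}


def obligations_for(system: str) -> list[str]:
    """Return the obligations listed for an example system's category."""
    tier = EXAMPLE_SYSTEMS[system]
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for name, tier in EXAMPLE_SYSTEMS.items():
        print(f"{name} ({tier.value}): {', '.join(obligations_for(name))}")
```

Real systems do not come with a ready-made label, so in practice the hard part is deciding which category applies; the table only shows how obligations follow once that decision is made.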
